Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

Loading...

If you need support with IDC or have any questions, please open a new topic in IDC User Forum (preferred) or send email to [email protected].

Would you rather discuss your questions in an meeting with an expert from the IDC team? Book a 1-on-1 support session here: https://tinyurl.com/idc-help-request

If you are an NIH-funded investigator, you can join the that offers significant discounts on the use of cloud resources, and free training courses and materials on the use of the cloud.

If you need support with IDC or have any questions, please open a new topic in (preferred) or send email to [email protected].

Would you rather discuss your questions in an meeting with an expert from the IDC team? Book a 1-on-1 support session here:

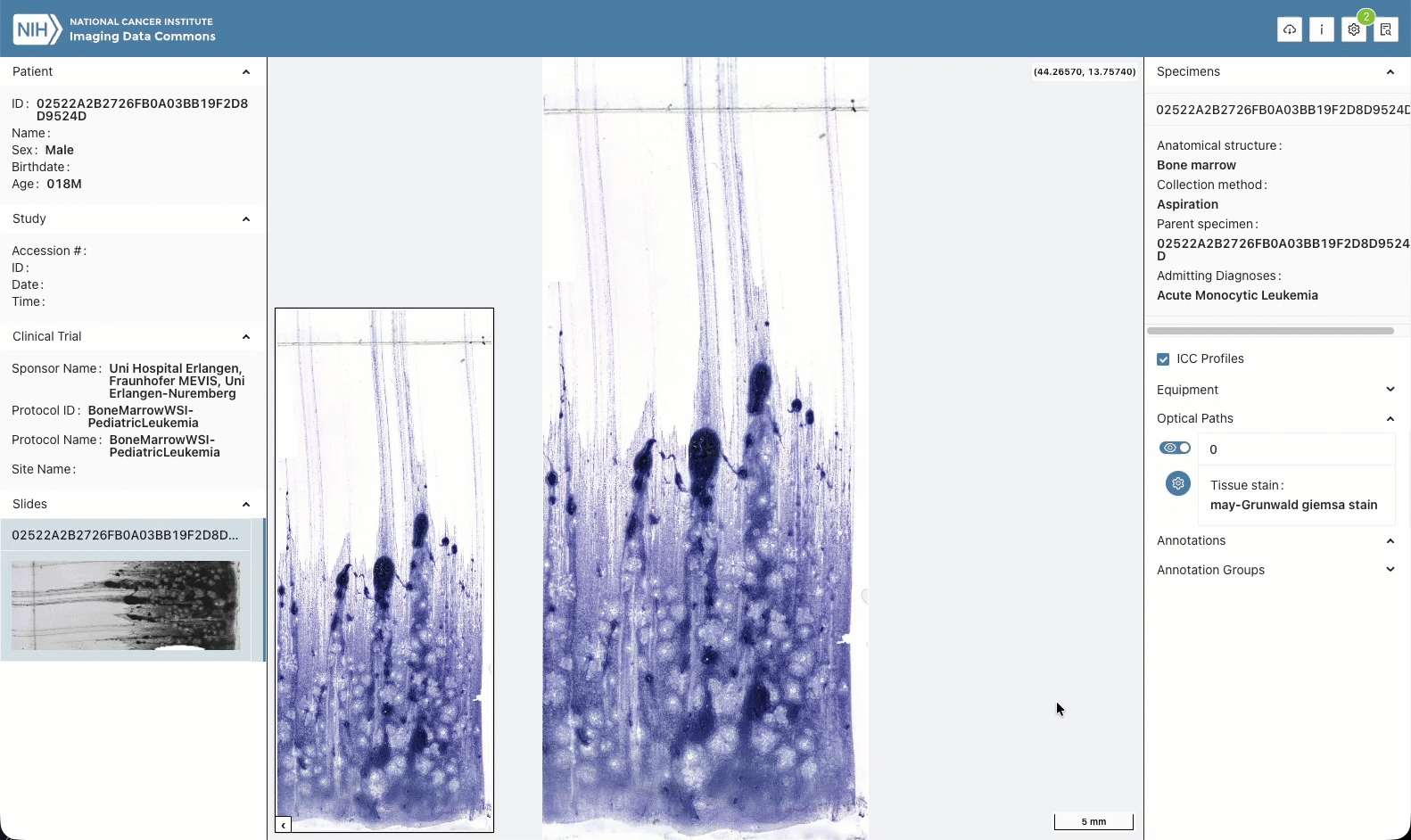

is a cloud-based environment containing publicly available cancer imaging data co-located with analysis and exploration tools. IDC is a node within the broader NCI infrastructure that provides secure access to a large, comprehensive, and expanding collection of cancer research data.

>95 TB of data: IDC contains radiology, brightfield (H&E) and fluorescence slide microscopy images, along with image-derived data (annotations, segmentations, quantitative measurements) and accompanying clinical data

free: all of the data in IDC is publicly available: no registration, no access requests

commercial-friendly: >95% of the data in IDC is covered by the permissive CC-BY license, which allows commercial reuse (small subset of data is covered by the CC-NC license); each file in IDC is tagged with the license to make it easier for you to understand and follow the rules

cloud-based: all of the data in IDC is available from both Google and AWS public buckets: fast and free to download, no out-of-cloud egress fees

harmonized: all of the images and image-derived data in IDC is harmonized into standard DICOM representation

IDC is as much about data as it is about what you can do with the data! We maintain and actively develop a variety of tools that are designed to help you efficiently navigate, access and analyze IDC data:

exploration: start with the IDC Portal to get an idea of the data available

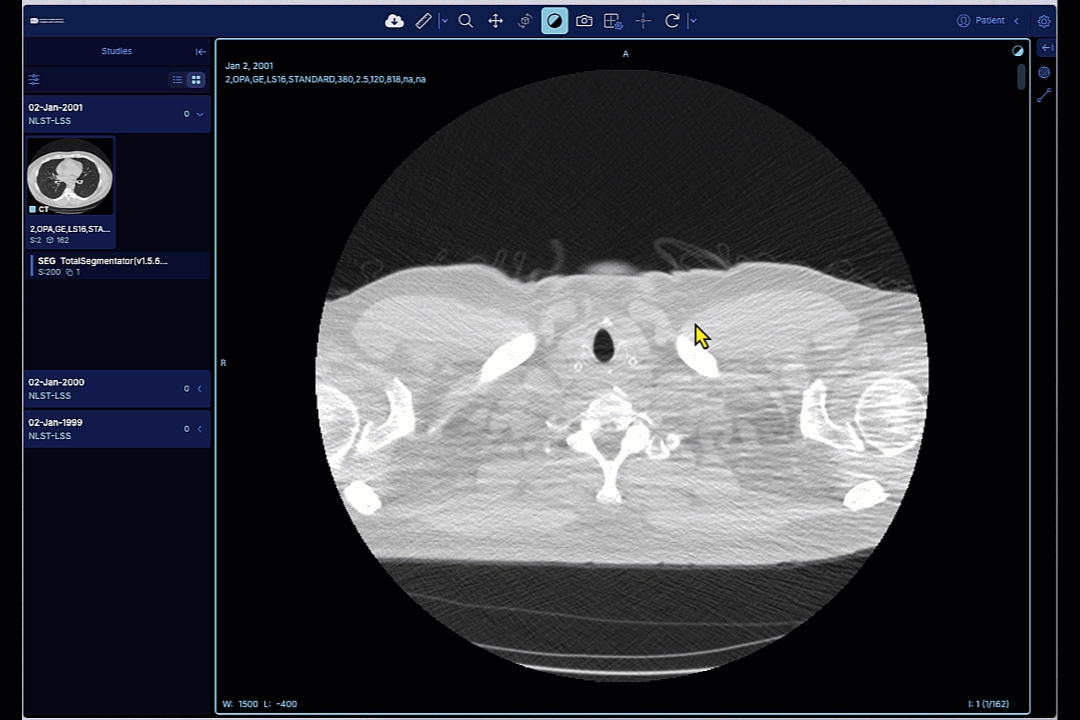

visualization: examine images and image-derived annotations and analysis results from the convenience of your browser using integrated OHIF, VolView and Slim open source viewers

programmatic access: use idc-index python package to perform search, download and other operations programmatically

cohort building: use rich and extensive metadata to build subsets of data programmatically using idc-index or BigQuery SQL

download: use your favorite S3 API client or idc-index to efficiently fetch any of the IDC files from our public buckets

analysis: conveniently access IDC files and metadata from the tools that are cloud-native, such as Google Colab or Looker; fetch IDC data directly into 3D Slicer using

We want Imaging Data Commons to be your companion in your cancer imaging research activities - from discovering relevant data to sharing your analysis results and showcasing the tools you developed!

Check out quick instructions on how to access and use IDC Portal web application that will help you search, subset and visualize data available in IDC.

IDC Portal is integrated with powerful visualization tools: just with your web browser you will be able to see IDC images and annotations using OHIF Viewer, Slim viewer and VolView!

We have many tools to help you search data in IDC, so that you download only what you need!

you can do basic filtering/subsetting of the data using IDC Portal, but if you are developer, you will want to learn how to use for programmatic access. will introduce you to the basics of idc-index for interaction with IDC content.

search clinical data: many of the IDC collections are accompanied by clinical data, which we parsed for you into searchable tabular representation - no need to download or parse CSV/Excel/PDF files! Dive into searching clinical data using .

if advanced content does not scare you, check out to learn how to search all of the metadata accompanying IDC using SQL and Google BigQuery.

We provide various tools for downloading data from IDC, as discussed in the . Access to all data in IDC is free! No registration. No access request forms. No logins.

once you have idc-index python package installed, download from the command line is as easy as running idc download <manifest_file>, or idc download <collection_id>.

looking for an interactive "point-and-click" application? is for you (note that you will only be able to visualize radiology - not microscopy - images in 3D Slicer)

We want to make it easier to understand performance of the latest advances in AI on real-world cancer imaging data!

if you have a Google account, you have free access to Google Colab, which allows you to run python notebooks on cloud VMs equipped with GPU - for free! Combined with idc-index for data access, this makes it rather easy to experiment with the latest AI tools! As an example, take a look at that allows you to apply MedSAM model to IDC data. You will find a growing number of notebooks to help you use IDC in .

use IDC to develop HuggingFace spaces that demonstrate the power of your models on real data: see we developed for SegVol

growing number of AI medical imaging models is being curated on the platform; see to learn how to apply those models on data from IDC

How about accompanying your next publication by a working demonstration notebook on relevant samples from IDC? You can see an example how we did this in .

With the cloud, you can do things that are simply impossible to do with your local resources.

read to learn how we applied TotalSegmentator+pyradiomics to >126,000 of CT scans of the NLST collection using Terra platform, completing the analysis in ~8 hours with the total cost ~$1000

contains the code we used in the above (this is really advanced content!)

If you have an algorithm, that you evaluated/published, that can enrich data in IDC with analysis results and you want to contribute those, or if you are a domain expert and would like to publish results of manual annotations you prepared - we want to hear from you!

IDC maintains a where we curate contributions of analysis results and other datasets produced by IDC (see the as one example of such contribution)

through a dedicated Zenodo record you will have a citation and DOI to get credit for your work; your data is ingested from Zenodo into IDC, and citation will be generated for the users of your data in IDC

once your data is in IDC, it should be easier to discover it, combine with other datasets, visualize and use from analysis workflows (as an example, see accompanying the RMS annotations)

If you need support with IDC or have any questions, please open a new topic in (preferred) or send email to [email protected].

Would you rather discuss your questions in an meeting with an expert from the IDC team? Book a 1-on-1 support session here:

Discourse (community forum):

Documentation:

GitHub organization:

Tutorials:

If you did not find the images you need in IDC, you can consider the following resources:

: while most of the public DICOM collections from TCIA are available in IDC, we do not replicate limited access TCIA collections

: list curated by Stephen Aylward

: list curated by University College London

: list curated by New York Univestity Health Sciences Library

We ingest and distribute datasets from variety of sources and contributors, primarily focusing on large data collection initiatives sponsored by US National Cancer Institute.

At this time, we do not have resources to prioritize receipt of the imaging data from individual PIs (but we are encouraging submissions of annotations/analysis results for existing IDC data!). Nevertheless, if you feel you might have a compelling dataset, please email us at [email protected].

On ingestion, we harmonize images and image-derived data into DICOM format for interoperability, whenever data is represented in a non-DICOM format.

Upon conversion, the data undergoes Extract-Transform-Load (ETL), which extracts DICOM metadata to make the data searchable, ingests the DICOM files into public S3 storage buckets and a DICOMweb store. Once the data is released, we provide various interfaces to access data and metadata.

We are actively developing a variety of capabilities to make it easier for the users to work with the data in IDC. Some of the examples of those tools include

provides interactive browser-based interface for exploration of IDC data

we are the maintainers of - an open-source viewer of DICOM digital pathology images; Slim is integrated with IDC Portal for visualizing pathology images and image-derived data available in IDC

we are actively contributing to the , and rely on it for visualizing radiology images and image-derived data

is a python package that provides convenience functions for accessing IDC data, including efficient download from IDC public S3 buckets

We welcome you to apply to contribute analysis results and annotations of the images available in IDC! These can be expert manual annotations, analysis results generated using AI tools, segmentations, contours, metadata attributes describing the data (e.g., annotation of the scan type), expert evaluation of the quality of existing AI-generated annotations in IDC.

If you would like your annotations/analysis results to be considered, you must establish the value of your contribution (e.g., describe the qualifications of the experts performing manual annotations, demonstrate robustness of the AI tool you are applying to images with a peer-reviewed publication or other type of evidence), and be willing to share your contribution under a permissive Creative Commons Attribution .

See more details on our curation policy , and reach out by sending email to with any questions or inquries. Every application will be reviewed by IDC stakeholders.

If your contribution is accepted by the IDC stakeholders:

we will work with you to choose the appropriate DICOM object type for your data and convert it into DICOM representation

upon conversion, we will create a Zenodo entry under the for your contribution so that you get the Digital Object Identifier (DOI), citation and recognition of your contribution

once published in IDC

your data will become searchable and viewable in IDC Portal, so it is easier for the users of your data to discover and work with your data

IDC is a component of the broader NCI , giving you access to the following:

can be used to find data related to the images in IDC in , and

Broad and (SB-CGC) can be used to apply analysis tools to the data in IDC (you can read more about how this can be done in from the IDC team)

platform curates a growing number of cancer imaging AI models that can be applied directly to the DICOM data available in IDC

IDC V14 introduced important enhancements to IDC data organization. The discussion of the organization of data in earlier versions is preserved here.

The following white papers are intended to provide explanation and clarification into applying DICOM to encoding specific types of data.

Comments and questions regarding those white papers are welcomed from the community! Please ask any related questions on IDC Discourse, or by adding comments directly in the documents referenced below:

Items in this section capture documentation relevant to organization of data in prior versions of IDC. Those are no longer relevant for the current data organization, and are preserved since the prior versions of data are still available to IDC users.

IDC API v1 has been released with the IDC Production release (v4).

3D Slicer extensions SlicerIDCBrowser can be used for interactive download of IDC data

we are contributing to a variety of tools that aim to simplify the use of DICOM in cancer imaging research; these include OpenSlide and BioFormats bfconvert library that can be used for conversion between DICOM Whole Slide Imaging (WSI) format and other slide microscopy formats, dcmqi library for converting image analysis results to and from DICOM representation

files can be downloaded very efficiently using S3 interface and idc-index

You can download data at the patient/case, DICOM study or series levels directly from the IDC Portal interface, as demonstrated below!

The Imaging Data Commons Portal provides a web-based interactive interface to browse the data hosted by IDC, visualize images, build manifests describing selected cohorts, and download images defined by the manifests.

The slides below give a quick guided overview of how you can use IDC Portal.

No login is required to use the portal, to visualize images, or to download data from IDC!

Imaging Data Commons team has been funded in whole or in part with Federal funds from the National Cancer Institute, National Institutes of Health, under Task Order No. HHSN26110071 under Contract No. HHSN261201500003l.

We gratefully acknowledge and the that support public hosting of IDC-curated content, and cover out-of-cloud egress fees!

Several of the members of the IDC team utilize compute resources supported via the

IDC Portal offers lots of flexibility in selecting items to download. In all cases, download of data from IDC Portal is a two step process:

Select items and export a manifest corresponding to your selection.

Use command-line python tool or 3D Slicer IDC browser extension to download the files for your selection, as discussed in .

IDC supports a variety of interfaces for fetching individual images, cohorts (groups of images), or portions of images, using desktop application, command-line interface, or programmatic API. These interfaces are covered in the subsequent pages. You should select the specific approach to accessing IDC data depending on your requirements.

Download directly from : no prerequisites other than a Chrome web browser!

DICOM defines its own model to map relevant entities from the real world. That model, as , is shown in the figure below.

The DICOM data model is implicit, and is not defined in a machine-readable structured form by the standard!

DICOM data model entities do not always map to DICOM objects! In fact, every DICOM object you will ever encounter in IDC will contain attributes describing various properties of the entries at different levels of the real world data model. Such objects are called Composite Information Objects. The of the Composite Information Object Definitions is shown below, and covers all of the composite objects defined by the standard.

DICOM and TIFF are two different image file formats that share many similar characteristics, and are capable of encoding exactly the same pixel data, whether uncompressed, or compressed with common lossy schemes (including JPEG and JPEG 2000). This allow the pixel data to be losslessly transformed from one format to the other and back.

The DICOM file format was also deliberately designed to allow the two formats (TIFF and DICOM) to peacefully co-exist in the same file, sharing the same pixel data without expanding the file size significantly. This is achieved by leaving some unused space at the front of the DICOM file ("preamble"), which allows for the presence of a TIFF format recognition code ("magic number") and a pointer to its Image File Directory (IFD), which in turn contains pointers into the shared DICOM Pixel Data element.

The dual-personality mechanism supports both traditional strip-based TIFF organization, such as might be used to encode a single frame image, as well as the tile-based format, which is commonly used for Whole Slide Images (WSI), and which is encoded in DICOM with each tile as a frame of a "multi-frame" image.

Unlike TIFF files, which allow multiple different sized images to be encoded in the same file, DICOM does not, so there are limits to this approach. For example, though an entire WSI pyramid can be encoded in a TIFF file, the DICOM WSI definition requires each pyramid layer to be in a separate file, and all frames (tiles) within the same file to be the same size.

Most of the structural metadata that describes the organization and encoding of the pixel data is similar in DICOM and TIFF. It is copied into the tags (data elements) encoded in the respective format "headers". Biomedical-specific information, such as patient, specimen and anatomical identifiers and descriptions, as well as acquisition technique, is generally only encoded in the DICOM data elements, their being no corresponding standard TIFF tags for it. Limited spatial information (such as physical pixel size) can be encoded in TIFF tags, but more complex multi-dimensional spatial location is standardized only in the DICOM data elements.

Topic-specific dashboards

The primary mechanism for accessing data from IDC is by searching the metadata using the idc-index python package or BigQuery tables, and downloading the binary files from public cloud buckets, as discussed in . There is no limit, quota or fees associated with downloading IDC files from the buckets.

Effective March 2024, as a pilot project, IDC also provides access to the DICOM data via the DICOMweb interface available at this endpoint: . This endpoint is read-only. It will route the requests to the Google Healthcare API DICOM store containing IDC data.

Our DICOMWeb endpoint should only be used when data access needs cannot be satisfied using other mechanisms (e.g., when accessing individual frames of the microscopy images without having to download the entire binary file).

Egress of data via the DICOMweb interface is capped at a non-disclosed limit that is tracked per IP. It is not acceptable to “IP hop” in an attempt to circumvent individual daily quotas, since there is also a global daily cap as well to prevent full egress of the imaging collection. Note that if this global cap is hit, all other users of the site would be unable to use the viewers for the rest of the day (using the UTC clock). Thus, IP hopping against the proxy that causes the global quota to be hit will be considered a denial-of-service attack.

As of 15-July-2025, support for user defined cohorts has been removed from the both the IDC API V1 and the IDC API V2. The API documentation has been revised accordingly.

This section describes version 2 of the IDC REST API . The documentation for the version 1 API will be found .

This API is designed for use by developers of image analysis and data mining tools to directly query the public resources of the IDC and retrieve information into their applications. The API complements the IDC web application but eliminates the need for users to visit the IDC web pages to perform manifest export, and transfer of image data to some local file system.

The IDC API conforms to the specification which "defines a standard, language-agnostic interface to RESTful APIs which allows both humans and computers to discover and understand the capabilities of the service without access to source code, documentation, or through network traffic inspection."

Note: As of 15-July-2025, support for user defined cohorts has been removed from the both the IDC API V1 and the IDC API V2. The API documentation has been revised accordingly.

This section describes v1 of the IDC REST API . This API is designed for use by developers of image analysis and data mining tools to directly query the public resources of the IDC and retrieve information into their applications. The API complements the IDC web application but eliminates the need for users to visit the IDC web pages to perform cohort creation, manifest export, and transfer of image data to some local file system.

The IDC API conforms to the specification which "defines a standard, language-agnostic interface to RESTful APIs which allows both humans and computers to discover and understand the capabilities of the service without access to source code, documentation, or through network traffic inspection."

This section of the documentation complements the tutorials available in our notebooks repository:

: all of the pathology images in IDC are in DICOM Slide Microscopy format; this notebook will help you get started with using this representation and also searching IDC pathology images.

: introduction to the key metadata accompanying IDC slide microscopy images that can be used for subsetting data and building cohorts.

In the following subsections you will find notebooks that don't require python programming, or have dependencies that make them not suitable for the python notebook format.

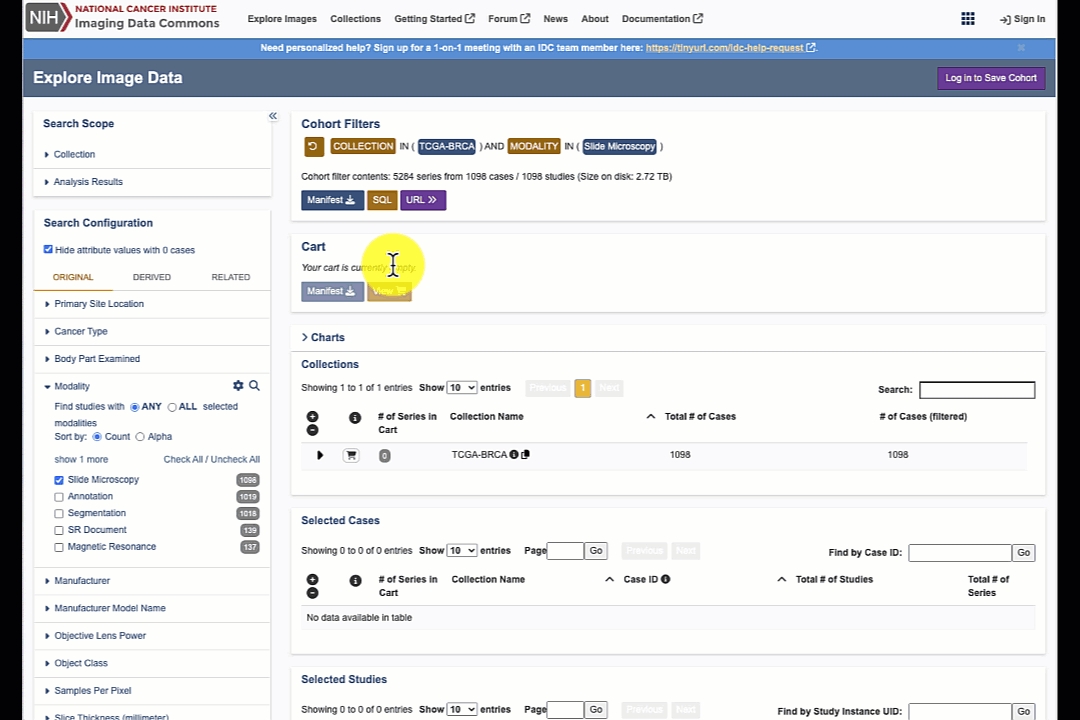

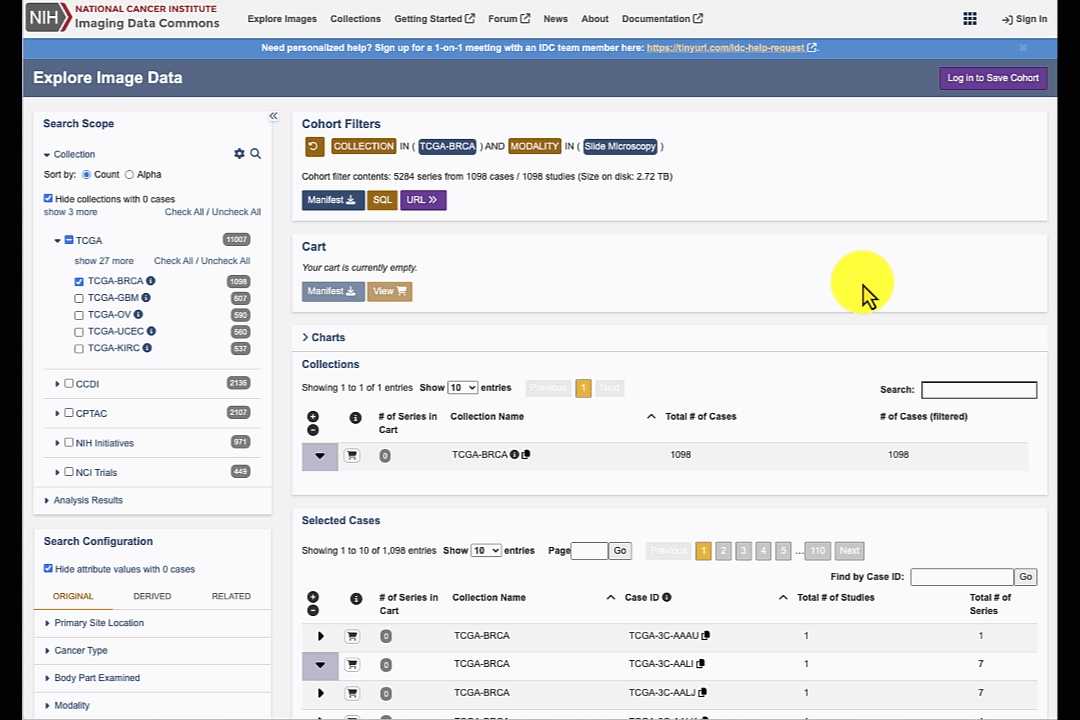

Search results are updated dynamically based on the search configuration. At any time you can expand the items on the right to explore the selected collections, cases, studies and series.

Studies and series tables include the button to open those in the browser-based image viewer.

See IDC API endpoint details at .

is a free tool that turns your data into informative, easy to read, easy to share, and fully customizable dashboards and reports.

In this section you can learn how to very quickly make a custom Looker Studio dashboard to explore the content of your cohort, and find some additional examples of using Looker Studio for analyzing content of IDC.

are components of the that bring data and computational power together to enable cancer research and discovery.

Our current experience in using NCI Cloud Resources for cancer image analysis is summarized in the following preprint:

Thiriveedhi, V. K., Krishnaswamy, D., Clunie, D., Pieper, S., Kikinis, R. & Fedorov, A. Cloud-based large-scale curation of medical imaging data using AI segmentation. Research Square (2024). doi:

Since IDC data is available via standard interfaces, you can use any of the tool supporting those interfaces to access the data. This page provides pointers to some of such tools that you might find useful.

If you are aware of any other tool that is not listed here, but is helpful for accessing IDC data, please let us know on the , and we will be happy to add it here!

: open-source Python interface to ,

This section contains various recipes that might be useful in utilizing GCP Compute Engine (GCE).

You are also encouraged to review the slides in the following presentation that provides an introduction into GCE, and shares some best practices the its usage.

W. Longabaugh. Introduction to Google Cloud Platform. Presented at MICCAI 2021. ()

The dictionary of TIFF tags can be extended with application-specific entries. This has been done for various non-medical and medical applications (e.g., GeoTIFF, DNG, DEFF). Other tools have used alternative mechanisms, such as defining text string (Leica/Aperio SVS) or structured metadata in other formats (such as XML for OME) buried within a TIFF string tag (e.g, ImageDescription). This approach can be used with DICOM-TIFF dual-personality files as well, since DICOM does not restrict the content of the TIFF tags; it does require updating or crafting of the textual metadata to actually reflect the characteristics of the encoded pixel data.

It is hoped that the dual-personality approach may serve to mitigate the impact of limited support of one format or the other in different clinical and research tools for acquisition, analysis, storage, indexing, distribution, viewing and annotation.

idc-index interface: command-line and Python API interface to download images corresponding to the specific patient/study/series, or a cohort defined by a manifest

3D Slicer interface: desktop application to download images corresponding to the specific patient/study/series, or a cohort defined by a manifest

s5cmd: command-line interface to download images for a cohort defined by a manifest (unlike idc-index, does not organize downloaded images into folders corresponding to IDC data model hierarchy)

DICOMweb interface: REST API interface to access both metadata and pixel data at the granularity of image frames/tiles

Directly loading DICOM objects from Google Cloud or AWS in Python: Python API interface to access both metadata and pixel data at the granularity of image frames/tiles

Program- and Collection-specific dashboards

LIDC-IDRI collection dashboard (see details in this paper)

If you reach your daily quota, but feel you have a compelling cancer imaging research use case to request an exception to the policy and an increase in your daily quota, please reach out to us at [email protected] to discuss the situation.

We are continuously monitoring the usage of the proxy. Depending on the actual costs and usage, this policy may be revisited in the future to restrict access via the DICOMweb interface for any uses other than IDC viewers.

If you have feedback about the desired features of the IDC API, please let us know via the IDC support forum.

The API is a RESTful interface, accessed through web URLs. There is no software that an application developer needs to download in order to use the API. The application developer can build their own access routines using just the API documentation provided. The interface employs a set of predefined query functions that access IDC data sources.

The IDC API is intended to enable exploration of IDC hosted data without the need to understand and use the Structure Query Language (SQL). To this end, data exploration capabilities through the IDC API are limited. However, IDC data is hosted using the standard capabilities of the the Google Cloud Platform (GCP) Storage (GCS) and BigQuery (BQ) components. Therefore, all of the capabilities provided by GCP to access GCS storage buckets and BQ tables are available for more advanced interaction with that data.

SwaggerUI is a web based interface that allows users to try out APIs and easily view their documentation. You can access the IDC API SwaggerUI here.

This Google Colab notebook serves as an interactive tutorial to accessing the IDC API using Python.

The API is a RESTful interface, accessed through web URLs. There is no software that an application developer needs to download in order to use the API. The application developer can build their own access routines using just the API documentation provided. The interface employs a set of predefined query functions that access IDC data sources.

The IDC API is intended to enable exploration of IDC hosted data without the need to understand and use the Structure Query Language (SQL). To this end, data exploration capabilities through the IDC API are limited. However, IDC data is hosted using the standard capabilities of the the Google Cloud Platform (GCP) Storage (GCS) and BigQuery (BQ) components. Therefore, all of the capabilities provided by GCP to access GCS storage buckets and BQ tables are available for more advanced interaction with that data.

SwaggerUI is a web based interface that allows users to try out APIs and easily view their documentation. You can access the IDC API SwaggerUI here.

This Google Colab notebook serves as an interactive tutorial to accessing the IDC API using Python.

You will see "Cart" icon in the search results collections/cases/studies/series tables. Any of the items in these tables can be added to the cart for subsequent downloading of the corresponding files.

Get the manifest for the cart content using "Manifest" button in the Cart panel.

Clicking "Manifest" button in the "Cohort Filters" panel will given you the manifest for all of the studies that match your current selection criteria.

Studies table contains a button for downloading manifest that will contain references to the files in the given study. To download a single series, no manifest is needed. You will see the command line to run to do the download.

If you would like to download the entire study, or the specific image you see in the image viewer, you can use the download button in the viewer interface.

As can be observed from this diagram, "each Composite Instance IOD [Entity-Relationship] Model requires that all Composite Instances that are part of a specific Study shall share the same context. That is, all Composite Instances within a specific Patient Study share the same Patient and Study information; all Composite Instances within the same Series share the same Series information; etc." (ref).

Each of the boxes in the diagram above corresponds to Information Entities (IEs), which in turn are composed from Information Modules. Information Modules group attributes that are related. As an example, Patient IE included in the MR object will include Patient Information Module, which in turn will include such attributes as PatientID, PatientName, and PatientSex.

Click on the "i" button to toggle information panel about the individual items in the search panels

Cohort filters panel: get the shareable URL for the current selection by clicking "URL" button in the Cohort Filters panel

Get the manifest for downloading all of the matching studies by clicking "Manifest" button in the Cohort Filters panel

You can copy identifiers of the individual collections, cases, studies or series to the clipboard - those can be used to download corresponding files as discussed in the Downloading data section - using command-line download tool or 3D Slicer IDC extension

IDC and TCIA are partners in providing FAIR data for cancer imaging researchers.

TCIA provides unique service to work with data submitters to de-identify cancer imaging data and make it available for download.

The mission of IDC is to support efficient access and use of the cancer imaging data, after it was de-identified and released.

Here are some of the highlights that make IDC unique:

Unique datasets: while all of the public TCIA DICOM collections are available in IDC, there is a growing amount of data in IDC that is not available anywhere else:

DICOM digital pathology collections from prominent initiatives: Childhood Cancer Data Initiative (CCDI), GTEx, TCGA, CPTAC, HTAN, CMB

image analysis results available only from IDC, such as TotalSegmentator segmentations and radiomics features for most of the CT images in the NLST collection

Cloud-native: IDC makes the data available in public cloud buckets, the egress is free (TCIA provides download from on-premises servers at a single institution): chances are your will be able to download data from IDC much faster than from TCIA

Partnerships with cloud vendors: IDC collaborates with Public Datasets Programs of Amazon Web Services and Google Cloud to support hosting and free out-of-cloud egress, contributing to improved accessibility, sustainability and longevity of the resource

State of the art tools: IDC maintains superior community recognized tools to support the use of the data:

modern OHIF Viewer v3 for radiology data, with support of visualization of annotations and segmentations;

Slim viewer for digital pathology and annotations

highly capable IDC Portal

Standard access interfaces: IDC offers standard interfaces for data access: S3 API for file download, DICOMweb for interoperability with DICOM tools, SQL for searching all of the DICOM metadata (TCIA offers various non-standard, in-house interfaces and APIs for data access)

Harmonized data: All of the data (radiology and digital pathology images, annotations, segmentations, image-derived features) available in IDC is harmonized into DICOM representation, which means

interoperability: you can use IDC data with any DICOM-compatible tool

metadata: every single file in IDC is accompanied by metadata that follows DICOM data model, and is associated with unique identifiers, allowing you to build reproducible cohorts

uniform representation: you don't need to customize your processing pipelines to a specific collection, and can build cohorts combining data across collections

Co-location with cloud compute resources: IDC data is easier to access from cloud computing resources, allowing you to more easily experiment with the new analysis tools and scale your computation

Versioning: IDC data is versioned: you will be able to access the exact files you analyzed in a given verison of IDC even if there were any updates to the collection after you accessed it, helping you achieve reproducibility of your analyses

Open-source tool stack: all of the tools developed by IDC are shared under permissive licenses to support community contribution, reuse and sustainability

Check out the documentation page!

Note that currently IDC prioritizes submissions from NCI-funded driving projects and data from special selected projects.

If you would like to submit images, it will be your responsibility to de-identify them first, documenting the de-identification process and submitting that documentation for the review by IDC stakeholders.

We welcome submissions of image-derived data (expert annotations, AI-generated segmentations) for the images already in IDC, see IDC Zenodo community to learn about the requirements for such submissions!

IDC works closely with and mirrors TCIA public collections. If you submit your DICOM data to TCIA and your data is released as a public collection, it will be automatically available in IDC in a following release.

If you are interested in making your data available within IDC, please contact us by sending email to .

IDC data is stored in the cloud buckets, and you can search and for free and without login.

If you would like to use the cloud for analysis of the data, we recommend you start with the free tier of to get free access to a cloud-hosted VM with GPU to experiment with analysis workflows for IDC data. If you are an NIH-funded researcher, you may be eligible for a free allocation via . US-based researchers can also access free cloud-based computing resources via .

IDC pilot release took place in Fall 2020, followed by the production release in September 2021. IDC team is continuously refining the capabilities of IDC Portal and various tools, and publishes new data releases every 3-4 months.

We host most of the public collections from . We also host HTAN and other pathology images not hosted by TCIA. You can review the complete, up-to-date list of .

Please cite the latest paper from the IDC team. Please also make sure you acknowledge the specific data collections you used in your analysis.

Fedorov, A., Longabaugh, W. J. R., Pot, D., Clunie, D. A., Pieper, S. D., Gibbs, D. L., Bridge, C., Herrmann, M. D., Homeyer, A., Lewis, R., Aerts, H. J. W. L., Krishnaswamy, D., Thiriveedhi, V. K., Ciausu, C., Schacherer, D. P., Bontempi, D., Pihl, T., Wagner, U., Farahani, K., Kim, E. & Kikinis, R. National cancer institute imaging data commons: Toward transparency, reproducibility, and scalability in imaging artificial intelligence. Radiographics 43, (2023).

The main website for the Cancer Research Data Commons (CRDC) is

Clinical data that was shared by the submitters is available for a number of imaging collections in IDC. Please see on how to search that data and how to link clinical data with imaging metadata!

Many of the imaging collections are also accompanied by the genomics or proteomics data. CRDC provides the API to locate such related datasets.

IDC Portal gives you access to just a small subset of the metadata accompanying IDC images. If you want to learn more about what is available, you have several options:

from our Getting Started tutorial series explains how to use - a python package that aims to simplify access to IDC data

will help you get started with searching IDC metadata in BigQuery, which gives you access to all of the DICOM metadata extracted from IDC-hosted files

if you are not comfortable writing queries or coding in pyhon, you can use to search using some of the attributes that are not available through the portal. You can also to include additional attributes.

IDC relies on DICOM data model for organizing images and image-derived data. At the same time, IDC includes certain attributes and data types that are outside of the DICOM data model. The Entity-Relationship (E-R) diagram and examples below summarize a simplified view of the IDC data model (you will find the explanation of how to interpret the notation used in this E-R diagram in this page from Mermaid documentation).

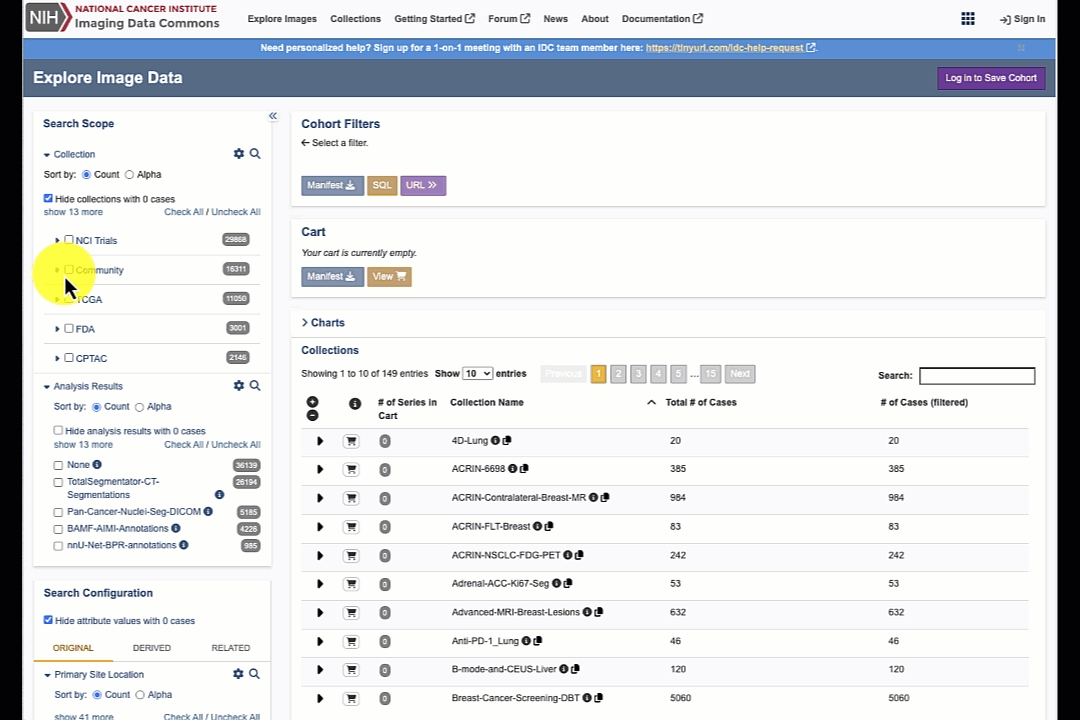

IDC content is organized in Collections: groups of DICOM files that were collected through certain research activity.

Collections are organized into Programs, which group related collections, or those collections that were contributed under the same funding initiative or a consortium. Example: TCGA program contains TCGA-GBM, TCGA-BRCA and other collections. You will see Collections nested under Programs in the upper left section of the IDC Portal. You will also see the list of collections that meet the filter criteria in the top table on the right-hand side of the portal interface.

Individual DICOM files included in the collection contain attributes that organize content according to the DICOM data model.

Each collection will contain data for one or more case, or patient. Data for the individual patient is organized in DICOM studies, which group images corresponding to a single imaging exam/enconter, and collected in a given session. Studies are composed of DICOM series, which in turn consist of DICOM instances. Each DICOM instance correspond to a single file on disk. As an example, in radiology imaging, individual instances would correspond to image slices in multi-slice acquisitions, and in digital pathology you will see a separate file/instance for each resolution layer of the image pyramid. When using IDC Portal, you will never encounter individual instances - you will only see them if you download data to your computer.

Analysis results collection is a very important concept in IDC. These contain analysis results that were not contributed as part of any specific collection. Such analysis results might be contributed by investigators unrelated to those that submitted the analyzed images, and may span images across multiple collections.

One of the fundamental principles of DICOM is the use of controlled terminologies, or lexicons, or coding schemes (for the purposes of this guide, these can be used interchangeably). While using the DICOM data stored in IDC, you will encounter various situations where the data is captured using coded terms.

Controlled terminologies define a set of codes, and sometimes their relationships, that are carefully curated to describe entities for a certain application domain. Consistent use of such terminologies helps with uniform data collection and is critical for harmonization of activities conducted by independent groups.

When codes are used in DICOM, they are saved as triplets that consist of

CodeValue: unique identifier for a term

CodingSchemeDesignator: code for the authority that issued this code

CodeMeaning: human-readable code description

DICOM relies on various sources of codes, all of which are listed in of the standard.

As an example, if you query the view with the following query in the BQ console:

You will see columns that contain coded attributes of the segment. In the example below, the value of AnatomicRegion corresponding to the segment is assigned the value (T-04000, SRT, Breast), where "SRT" is the coding scheme designator corresponding to the coding scheme.

As another example, quantitative and qualitative measurements extracted from the SR-TID1500 objects are stored in the and views, respectively. If we query those views to see the individual measurements, they also show up as coded items. Each of the quantitative measurements includes a code describing the quantity being measured, the actual numeric value, and a code describing the units of measurement:

DICOM SR uses data elements to encode a higher level abstraction that is a tree of content, where nodes of the tree and their relationships are formalized. SR-TID1500 is one of many standard templates that define constraints on the structure of the tree, and is intended for generic tasks involving image-based measurements. DICOM SR uses standard terminologies and codes to deliver structured content. These codes are used for defining both the concept names and values assigned to those concepts (name-value pairs). Measurements include coded concepts corresponding to the quantity being measured, and a numeric value accompanied by coded units. Coded categorical or qualitative values may also be present. In SR-TID1500, measurements are accompanied by additional context that helps interpret and reuse that measurement, such as finding type, location, method and derivation. Measurements computed from segmentations can reference the segmentation defining the region and the image segmented, using unique identifiers of the respective objects.

At this time, only the measurements that accompany regions of interest defined by segmentations are exposed in the IDC Portal, and in the measurements views maintained by IDC!

Open source DCMTK tool can be used to render the content of the DICOM SR tree in a human-readable form (you can see one example of such rendering ). Reconstructing this content using tools that operate with DICOM content at the level of individual attributes can be tedious. We recommend the tools referenced above that also provide capabilities for reading and writing SR-TID1500 content:

: high-level DICOM abstractions for the Python programming language

: open source DCMTK-based C++ library and command line converters that aim to help with the conversion between imaging research formats and the standard DICOM representation for image analysis results

: C++ library that provides API abstractions for reading and writing SR-TID1500 documents

Tools referenced above can be used to 1) extract qualitative evaluations and quantitative measurements fro the SR-TID1500 document; 2) generate standard-compliant SR-TID1500 objects.

We differentiate between the original and derived DICOM objects in the IDC portal and discussions of the IDC-hosted data. By Original objects we mean DICOM objects that are produced by image acquisition equipment - MR, CT, or PET images fall into this category. By Derived objects we mean those objects that were generated by means of analysis or annotation of the original objects. Those objects can contain, for example, volumetric segmentations of the structures in the original images, or quantitative measurements of the objects in the image.

Most of the images stored on IDC are saved as objects that store individual slices of the image in separate instances of a series, with the image stored in the PixelData attribute.

As of production release, IDC contains both radiology and digital pathology images. The following publication can serve as a good introduction into the use of DICOM for digital pathology.

Herrmann, M. D., Clunie, D. A., Fedorov, A., Doyle, S. W., Pieper, S., Klepeis, V., Le, L. P., Mutter, G. L., Milstone, D. S., Schultz, T. J., Kikinis, R., Kotecha, G. K., Hwang, D. H., Andriole, K. P., John Lafrate, A., Brink, J. A., Boland, G. W., Dreyer, K. J., Michalski, M., Golden, J. A., Louis, D. N. & Lennerz, J. K. Implementing the DICOM standard for digital pathology. J. Pathol. Inform. 9, 37 (2018).

Open source libraries such as DCMTK, GDCM, ITK, and pydicom can be used to parse such files and load pixel data of the individual slices. Recovering geometry of the individual slices (spatial location and resolution) and reconstruction of the individual slices into a volume requires some extra consideration.

: command-line tool to convert neuroimaging data from the DICOM format to the NIfTI format

: open source software for image computation, which includes

: python library providing API and command-line tools for converting DICOM images into NIfTI format

Indexing of the collection of NSCLC-Radiomics by the Data Commons Framework is pending.

QIN multi-site collection of Lung CT data with Nodule Segmentations: only items corresponding to the LIDC-IDRI original collection are included

DICOM SR of clinical data and measurement for breast cancer collections to TCIA: only items corresponding to the ISPY1 original collection are included

: Some of the segmentations in this collection are empty (as an example, SeriesNumber 42100 with SeriesDescription "VOI PE Segmentation thresh=70" in is empty).

Due to the existing limitations of Google Healthcare API, not all of the DICOM attributes are extracted and are available in BigQuery tables. Specifically:

sequences that have more than 15 levels of nesting are not extracted (see ) - we believe this limitation does not affect the data stored in IDC

sequences that contain around 1MiB of data are dropped from BigQuery export and RetrieveMetadata output currently. 1MiB is not an exact limit, but it can be used as a rough estimate of whether or not the API will drop the tag (this limitation was not documented as of writing this) - we know that some of the instances in IDC will be affected by this limitation. The fix for this limitation is targeted for sometime in 2021, according to the communication with Google Healthcare support.

IDC provides a variety of interfaces to access both the data (as files) and metadata (to subset files and build cohorts). The flow of data and the relationship between the various components IDC uses is summarized in the following figure.

We maintain the following resources to enable access to IDC data:

Cloud storage buckets: files maintained by IDC are mirrored between Google and AWS public storage buckets that provide fee-free egress without requiring login. The buckets organize files by DICOM series, each series stored in a separate folder. Given the large overall size of data in IDC, you will likely need to use one of the search interfaces to identify relevant series first.

BigQuery tables: collection-level metadata, DICOM metadata, clinical data tables available via SQL query interface.

Python API: pip-installable provides programmatic interface and command-line tools to search IDC data using most important metadata attributes, and to download files corresponding to the selected cohorts from the cloud buckets

: alternative language-independent API for selecting subsets of data

: DICOM files and metadata queries available from Google Healthcare DICOM stores

DICOM Radiotherapy Structure Sets (RTSS, or RTSTRUCT) define regions of interest by a set of planar contours.

RTSS objects can be identified by the RTSTRUCT value assigned to the Modality attribute, or by SOPClassUID = 1.2.840.10008.5.1.4.1.1.481.3.

If you use the IDC Portal, you can select cases that include RTSTRUCT objects by selecting "Radiotherapy Structure Set" in the "Original" tab, "Modality" section (filter link). Here is a sample study that contains an RTSS series.

As always, you get most of the power in exploring IDC metadata when using SQL interface. As an example, the query below will select a random study that contains a RTSTRUCT series, and return a URL to open that study in the viewer:

# get the viewer URL for a random study that

# contains RTSTRUCT modality

SELECT

ANY_VALUE(CONCAT("https://viewer.imaging.datacommons.cancer.gov/viewer/", StudyInstanceUID)) as viewer_url

FROM

`bigquery-public-data.idc_current.dicom_all`

WHERE

StudyInstanceUID IN (

# select a random DICOM study that includes an RTSTRUCT object

SELECT

StudyInstanceUID

FROM

`bigquery-public-data.idc_current.dicom_all`

WHERE

SOPClassUID = "1.2.840.10008.5.1.4.1.1.481.3"

ORDER BY

RAND()

LIMIT

1)RTSTRUCT relies on unstructured text in describing the semantics of the individual regions segmented. This information is stored in the StructureSetROISequence.ROIName attribute. The following query will return the list of all distinct values of ROIName and their frequency.

We recommend tool for converting planar contours of the individual structure sets into volumetric representation.

The following characteristics apply to all IDC APIs:

You access a resource by sending an HTTP request to the IDC API server. The server replies with a response that either contains the data you requested, or a status indicator.

An API request URL has the following structure: <BaseURL><API version><QueryEndpoint>?<QueryParameters>. For example, this curl command is a request for metadata on all IDC collections:

curl -X GET "https://api.imaging.datacommons.cancer.gov/v1/collections" -H "accept: application/json"

Authorization

Some of the APIs, such as /collections and /cohorts/preview, can be accessed without authorization. APIs that access user specific data, such as cohorts, necessarily require account authorization.

To access these APIs that require IDC authorization, you will need to generate a credentials file. To obtain your credentials:

Clone the to your local machine.

Execute the idc_auth.py script either through the command line or from within python. Refer to the idc_auth.py file for detailed instructions.

Example usage of the generated authorization is demonstrated by code in the Google Colab notebook.

Several IDC APIs, specifically /cohorts/manifest/preview, /cohorts/manifest/{cohort_id}, /cohorts/query/preview, /cohorts/query/{cohort_id}, and /dicomMetadata, are paged. That is, several calls of the API may be required to return all the data resulting from such a query. Each accepts a _page_size query parameter that is the maximum number of objects that the client wants the server to return. The returned data from each of these APIs includes a next_page value. next_page is null if there is no more data to be returned. If next_page is non-null, then more data is available.

There are corresponding queries, /cohorts/manifest/nextPage, /cohorts/query/nextPage, and /dicomMetadata/nextpage endpoints, that each accept two query parameters: next_page, and page_size. In the case that the returned next_page value is not null, the corresponding ../nextPage endpoint is accessed, passing the next_page token returned by the previous call.

The manifest and query endpoints may return an HTTP 202 error. This indicates that the request was accepted but processing timed out before it was completed. In this case the client should resubmit the request including the next_page token that was returned with the error response.

This page provides details on each of the IDC API endpoints.

The Imaging Data Commons Portal provides a web-based interactive interface to browse the data hosted by IDC, visualize images, build manifests describing selected cohorts, and download images defined by the manifests.

The slides below give a quick guided overview of how you can use IDC Portal.

No login is required to use the portal, to visualize images, or to download data from IDC!

Components on the left side of the page give you controls for configuring your selection:

Search scope allows you to limit your search to just the specific programs, collections and analysis results (as discussed in the documentation of the IDC Data model).

Search configuration gives you access to a small set of metadata attributes to select DICOM studies (where "DICOM studies" fit into IDC data model is also discussed in the page) that contain data that meets the search criteria.

Panels on the right side will automatically update based on what you select on the left side!

Selection configuration reflects the active search scope/filters in the Cohort Filters section. You can download all of the studies that match your filters. Below you will see the Cart section. Cart is helpful when selecting data by individual filters is too imprecise, and you want to have more granular control over your selection by selecting specific collections/patients/studies/series.

Filtering results section consists of the tables containing matching content that you can navigate following IDC Data model: first table shows the matching collections, selecting a collection will list matching cases (patients), selection of a case will populate the next table listing matching studies for the patient, and finally selecting a study will expand the final table with the list of series included in the study.

In the following sections of the documentation you will learn more about each of the items we just discussed.

Most of the data in IDC is received from the data collection initiatives/projects supported by US National Cancer Institute. Whenever source images or image-derived data is not in the DICOM format, it is harmonized into DICOM as part of the ingestion.

As of data release v21, IDC sources of data include:

DICOM Segmentation object (SEG) can be identified by SOPClassUID= 1.2.840.10008.5.1.4.1.1.66.4 Unlike most "original" image objects that you will find in IDC, SEG belongs to the family of enhanced multiframe image objects, which means that it stores all of the frames (slices) in a single object. SEG can contain multiple segments, a segment being a separate label/entity being segmented, with each segment containing one or more frames (slices). All of the frames for all of the segments are stored in the PixelData attribute of the object.

If you use the IDC Portal, you can select cases that include SEG objects by selecting "Segmentations" in the "Modality" section () under the "Original" tab . Here is that contains a SEG series.

You can further explore segmentations available in IDC via the "Derived" tab of the Portal by filtering those by specific types and anatomic locations. As an example, will select cases that contain segmentations of a nodule.

In this section we discuss derived DICOM objects, including annotations, that are stored in IDC. It is important to recognize that, in practice, annotations are often shared in non-standard formats. When IDC ingests a dataset where annotations are available in such a non-standard representation, those need to be harmonized into a suitable DICOM object to be available in IDC. Due to the complexity of this task, we are unable to perform such harmonization for all of the datasets. If you want to check if there are annotations in non-DICOM format available for a given collection, you should locate the original source of the data, and examine the accompanying documentation for available non-DICOM annotations.

As an example, the collection is available in IDC. If you mouse over the name of that collection in the IDC Portal, the tooltip will provide the overview of the collection and the link to the source.

SELECT

structureSetROISequence.ROIName AS ROIName,

COUNT(DISTINCT(SeriesInstanceUID)) AS ROISeriesCount

FROM

`bigquery-public-data.idc_current.dicom_all`

CROSS JOIN

UNNEST (StructureSetROISequence) AS structureSetROISequence

WHERE

SOPClassUID = "1.2.840.10008.5.1.4.1.1.481.3"

GROUP BY

ROIName

ORDER BY

ROISeriesCount DESCMetadata describing the segments is contained in the SegmentSequence of the DICOM object, and is also available in the BigQuery table view maintained by IDC in the bigquery-public-data.idc_current.segmentations BigQuery table. That table contains one row per segment, and for each segment includes metadata such as algorithm type and structure segmented.

We recommend you use one of the following tools to interpret the content of the DICOM SEG and convert it into alternative representations:

dcmqi: open source DCMTK-based C++ library and command line converters that aim to help with the conversion between imaging research formats and the standard DICOM representation for image analysis results

highdicom: high-level DICOM abstractions for the Python programming language

DCMTK: C++ library that provides API abstractions for reading and writing SEG objects

Tools referenced above can be used to 1) extract volumetrically reconstructed mask images corresponding to the individual segments stored in DICOM SEG; 2) extract segment-specific metadata describing its content; 3) generate standard-compliant DICOM SEG objects from research formats.

# get the viewer URL for a random study that

# contains SEG modality

SELECT

ANY_VALUE(CONCAT("https://viewer.imaging.datacommons.cancer.gov/viewer/", StudyInstanceUID)) as viewer_url

FROM

`bigquery-public-data.idc_current.dicom_all`

WHERE

StudyInstanceUID IN (

# select a random DICOM study that includes a SEG object

SELECT

StudyInstanceUID

FROM

`bigquery-public-data.idc_current.dicom_all`

WHERE

SOPClassUID = "1.2.840.10008.5.1.4.1.1.66.4"

ORDER BY

RAND()

LIMIT

1)all DICOM files from the public collections are mirrored in IDC

a subset of digital pathology collections and analysis results harmonized from vendor-specific representation (as available from TCIA) into DICOM Slide Microscopy (SM) format

Childhood Cancer Data Initiative (CCDI) (ongoing)

digital pathology slides harmonized into DICOM SM

The Cancer Genome Atlas (TCGA) slides harmonized into DICOM SM

Human Tumor Atlas Network (HTAN)

release 1 of the HTAN data harmonized into DICOM SM

National Library of Medicine Visible Human Project

v1 of the Visible Human images harmonized into DICOM MR/CT/XC

Genotype-Tissue Expression Project (GTex)

digital pathology slides harmonized into DICOM SM

The list of all of the IDC collections is available in IDC Portal here: https://portal.imaging.datacommons.cancer.gov/collections/.

Whenever IDC replicates data from a publicly available source, we include the reference to the origin:

from the IDC Portal Explore page, click on the "i" icon next to the collection in the collections list

source_doi metadata column contains Digital Object Identifier (DOI) at the granularity of the individual files and is available both via python idc-index package (see this tutorial on how to access it) and BigQuery interfaces

Check out Data release notes for information about the collections added in the individual IDC data releases.

Simplified workflow for IDC data ingestion is summarized in the following diagram.

You will also find the link to the source in the list of collections available in IDC.

Finally, if you select data using SQL, you can use the source_DOI and/or the source_URL column to identify the source of each file in the subset you selected (learn more about source_DOI, licenses and attribution in the part 3 of our Getting started tutorial).

For the collection in question, the source DOI is https://doi.org/10.7937/e4wt-cd02, and on examining that page you will see a pointer to the CSV file with the coordinates of the bounding boxes defining regions containing lesions.

Non-standard annotations are not searchable, usually are not possible to visualize in off-the-shelf tools, and require custom code to interpret and parse. The situation is different for the DICOM derived objects that we discuss in the following sections.

In IDC we define "derived" DICOM objects as those that are obtained by analyzing or post-processing the "original" image objects. Examples of derived objects can be annotations of the images to define image regions, or to describe findings about those regions, or voxel-wise parametric maps calculated for the original images.

Although DICOM standard provides a variety of mechanisms that can be used to store specific types of derived objects, most of the image-derived objects currently stored in IDC fall into the following categories:

voxel segmentations stored as DICOM Segmentation objects (SEG)

segmentations defined as a set of planar regions stored as DICOM Radiotherapy Structure Set objects (RTSTRUCT)

quantitative measurements and qualitative evaluations for the regions defined by DICOM Segmentations, those will be stored as a specific type of DICOM Structured Reporting (SR) objects that follows DICOM SR template TID 1500 "Measurements report" (SR-TID1500)

The type of the object is defined by the object class unique identifier stored in the SOPClassUID attribute of each DICOM object. In the IDC Portal we allow the user to define the search filter based on the human-readable name of the class instead of the value of that identifier.

You can find detailed descriptions of these objects applied to specific datasets in TICA in the following open access publications:

Fedorov, A., Clunie, D., Ulrich, E., Bauer, C., Wahle, A., Brown, B., Onken, M., Riesmeier, J., Pieper, S., Kikinis, R., Buatti, J. & Beichel, R. R. DICOM for quantitative imaging biomarker development: a standards based approach to sharing clinical data and structured PET/CT analysis results in head and neck cancer research. PeerJ 4, e2057 (2016). https://peerj.com/articles/2057/

Fedorov, A., Hancock, M., Clunie, D., Brochhausen, M., Bona, J., Kirby, J., Freymann, J., Pieper, S., J W L Aerts, H., Kikinis, R. & Prior, F. DICOM re-encoding of volumetrically annotated Lung Imaging Database Consortium (LIDC) nodules. Med. Phys. (2020). doi:10.1002/mp.14445

Visual Studio Code installed on your computer

A GCP VM you want to use for code development is up and running

Run the following command to populate SSH config files with host entries for each VM instance you have running

If the previous step completed successfully, you should see the running VMs in the Remote Explorer of VS Code, as in the screenshot below, and should be able to open a new session to those remove VMs.

Note that the SSH configuration may/will change if you restart your VM. In this case you will need to re-configure (re-run step 2 above).

If you would like to access IDC data via DICOMweb interface, you have two options:

IDC-maintained DICOM store available via proxy

DICOM store maintained by Google Healthcare

In the following we provide details for each of those options.

This store contains all of the data for the current IDC data release. It does not require authentication and is available via the following DICOMweb URL of the proxy (you can ignore the "viewer-only-no-downloads" part in the URL, it is a legacy constraint that is no longer applicable).

DICOMweb URL:

Limitations:

since all requests go through the proxy before reaching the DICOM store, you may experience reduced performance as compared to direct access you can achieve using the store described in the following section

there are per-IP and overall daily quotas, as described in IDC , that may not be sufficient for your use case

This store replicates all of the data from the idc-open-data bucket, which contains most of the data in IDC (learn more about the organization of data in IDC buckets from ).

DICOMweb URL (note the store name includes the IDC data release version that corresponds to its content: idc-store-v21):

This DICOM store is documented in .

Limitations:

most, but not all of the IDC data is available in this store

authentication with a Google account is required (anyone signed in with a Google account can access this interface, no whitelisting is required!)

since this DICOM store is not maintained directly by the IDC team, it may lag behind the latest IDC release in content in the future

Check out and the accompanying Colab notebook to learn more.

TL;DR: as of IDC v21, it is 95.89% of all of the DICOM series available in IDC (IDC-maintained DICOM store has all of the 100%).

Google Healthcare maintained DICOM store contains the latest versions of the DICOM series stored in the idc-open-data Google Storage bucket (see for details on buckets organization).

You can get the exact number of DICOM series in each of the buckets with the following python code (before running it, do pip install --upgrade idc-index):

As of IDC v21, the result of running the code above is the following, showing that 95.89% of DICOM series in IDC are available from the Google Healthcare maintained DICOM store (IDC-maintained DICOM store has all of the 100%).

TL;DR: our goal is to have the two stores in sync within 1-2 weeks of each IDC data release.

The DICOM store maintained by IDC is updated by the IDC team with each new release.

The DICOM store maintained by Google Healthcare is populated after the release. We hope to have that done within 1-2 weeks after the IDC release. As a new release of IDC data is out, there will be a new DICOM store maintained by Google Healthcare, and the connection to the IDC release version will be indicated in the store name. I.e., when IDC v22 is released, whenever you are able to access https://healthcare.googleapis.com/v1/projects/nci-idc-data/locations/us-central1/datasets/idc/dicomStores/ idc-store-v22/dicomWeb , it is expected to be in sync.

This section contains various pointers that may be helpful when working with Google Colab.

Google Colaboratory, or “Colab” for short, is a product from Google Research. Colab allows anybody to write and execute arbitrary python code through the browser, and is especially well suited to machine learning, data analysis and education. More technically, Colab is a hosted Jupyter notebook service that requires no setup to use, while providing free access to computing resources including GPUs.

IDC Colab example notebooks are maintained in this repository:

Notebook demonstrating deployment and application of abdominal structures segmentation tool to IDC data, developed for the course:

, contributed by , Mayo Clinic

, contributed by , Mayo Clinic

Notebooks contributed by , ISB-CGC, demonstrating the utility of BigQuery in correlative analysis of radiomics and genomics data:

Colab limitations:

Transferring data between Colab and Google Drive:

Potentially interesting sources of example notebooks:

IDC relies on DICOM for data modeling, representation and communication. Most of the data stored in IDC is in DICOM format. If you want to use IDC, you (hopefully!) do not need to become a DICOM expert, but you do need to have a basic understanding of how DICOM data is structured, and how to transform DICOM objects into alternative representations that can be used by the tools familiar to you.

This section is not intended to be a comprehensive introduction to the standard, but rather a very brief overview of some of the concepts that you will need to understand to better use IDC data.

As discussed in , the main mechanism for accessing the data stored in IDC is by using the storage buckets that contain individual files indexed through other interfaces. Each of the files in the IDC-maintained storage buckets encodes a DICOM object. Each DICOM object is a collection of data elements or attributes. Below is an example of a subset of attributes in a DICOM object, as generated by the IDC OHIF Viewer (which can be toggled by clicking the "Tag browser" icon in the IDC viewer toolbar):

The standard defines constraints on what kind of data each of the attributes can contain. Every single attribute defined by the standard is listed in the , which defines those constraints:

Value Representation (VR) defines the type of the data that data element can contain. There are 27 DICOM VRs, and they are defined in .

Value Multiplicity (VM) defines the number of items of the prescribed VR that can be contained in a given data element.

What attributes are included in a given object is determined by the type of object (or, to follow the DICOM nomenclature, Information Object). is dedicated to the definitions (IODs) of those objects.

It is critical to recognize that while all of the DICOM files at the high level are structured exactly in the same way and follow the same syntax and encoding rules, interpretation of the content of an individual file is dependent on the specific type of object it encodes!

How do you know what object is encoded in a given file (or instance of the object, using the DICOM lingo)? For this purpose there is an attribute SOPClassUID that uniquely identifies the class of the encoded object. The content of this attribute is not easy to interpret, since it is a unique identifier. To map it to the specific object class name, you can consult the complete list of object classes available in .

When you use the IDC portal to build your cohort, unique identifiers for the object classes are mapped to their names, which are available under the "Object class" group of facets in the search interface.

A somewhat related attribute that hints at the type of object is Modality, which is defined by the standard as "Type of equipment that originally acquired the data used to create the images in this Series", and is expected to take one of the values from . However, Modality is not equivalent to SOPClassUID, and should not be used as a substitute. As an example it is possible that data derived from the original modality could be saved as a different object class, but keep the value of modality identical.

Once a manifest has been created, typically the next step is to load the files onto a VM for analysis, and the easiest way to do this is to create your manifest in a BigQuery table and then use that to direct the file loading onto a VM. This guide shows how this can be done,

The first step is to export a file manifest for a cohort into BigQuery. You will want to copy this table into the project where you are going to run your VM. Do this using the Google BQ console, since the exported table can be accessed only using your personal credentials provided by your browser. The table copy living in the VM project will be readable by the service account running your VM.

Start up your VM. If you have many files, you will want to speed the loading process by using a VM with multiple CPUs. Google describes the various , but is not very specific about ingress bandwidth. However, in terms of published egress bandwidth, the larger machines certainly have more. Experimentation showed that an n2-standard-8 (8 vCPUs, 32 GB memory) machine could load 20,000 DICOM files in 2 minutes and 32 secconds, using 16 threads on 8 CPUs. That configuration reached a peak throughput of 68 MiB/s.

You also need to insure the machine has enough disk space. One of the checks in the script provided below is to calculate the total file load size. You might want to run that portion of the script and resize the disk as needed before actually doing the load.

performs the following steps:

Performs a query on the specified BigQuery manifest table and creates a local manifest file on your VM.

Performs a query that maps the GCS URLs of each file into DICOM hierarchical directory paths, and writes this out as a local TSV file on your VM.

Performs a query that calculates the total size of all the downloads, and reports back if there is sufficient space on the filesystem to continue.

To install the code on your VM and then setup the environment:

You then need to customize the settings in the script:

Finally, run the script:

Imaging Data Commons is being developed by a team of engineers and imaging scientists with decades of experience in cancer imaging informatics, cloud computing, imaging standards, security, open source tool development and data sharing.

Our team includes the following sites and project leads:

Brigham and Women's Hospital, Boston, MA, USA (BWH)

Andrey Fedorov, PhD, and Ron Kikinis, MD - Co-PIs of the project

Depending on whether you would like to download data interactively or programmatically, we provide two recommended tools to help you.

is a python package designed to simplify access to IDC data. Assuming you have Python installed on your computer (if for some reason you do not have Python, you can check out legacy download instructions ), you can get this package with pip like this:

Once installed, you can use it to explore, search, select and download corresponding files as shown in the examples below. You can also take a look at a short tutorial on using idc-index

An IDC manifest may include study and/or series GUIDs that can be resolved to the underlying DICOM instance files in GCS. Such use of GUIDs in a manifest enables a much shorter manifest compared to a list of per-instance GCS URLs. Also, as explained below, a GUID is expected to be resolvable even when the data which it represents has been moved.

In IDC, we use the term GUID

$ gcloud compute config-sshSELECT

*

FROM

`canceridc-data.idc_views.segmentations`

LIMIT

10Google Colab Tips for Power Users: https://amitness.com/2020/06/google-colaboratory-tips/

Mounting GCS bucket using gcsfuse: https://pub.towardsai.net/connect-colab-to-gcs-bucket-using-gcsfuse-29f4f844d074

Almost-free Jupyter Notebooks on Google Cloud: https://www.tensorops.ai/post/almost-free-jupyter-notebooks-on-google-cloud

Deepa Krishnaswamy, PhD

Katie Mastrogiacomo

Maria Loy

Institute for Systems Biology, Seattle, WA, USA (ISB)

David Gibbs, PhD - site PI

William Clifford, MS

Suzanne Paquette, MS

General Dynamics Information Technology, Bethesda, MD, USA (GDIT)

David Pot, PhD - site PI

Fabian Seidl

Fraunhofer MEVIS, Bremen, Germany (Fraunhofer MEVIS)

André Homeyer, PhD - site PI

Daniela Schacherer, MS

Henning Höfener, PhD

Massachusetts General Hospital, Boston, MA, USA (MGH)

Chris Bridge, DPhil - site PI

Radical Imaging LLC, Boston, MA, USA (Radical Imaging)

Rob Lewis, PhD - site PI

Igor Octaviano

PixelMed Publishing, Bangor, PA, USA (PixelMed)

David Clunie, MB, BS - site PI

Isomics Inc, Cambridge, MA, USA (Isomics)

Steve Pieper, PhD - site PI

Oversight:

Leidos Biomedical Research

Ulrike Wagner - project manager

Todd Pihl - project manager

National Cancer Institute

Erika Kim - federal lead

Granger Sutton - federal lead

We are grateful to the following individuals who contributed to IDC in the past, but are no longer directly involved in the development of IDC.

William Longabaugh, MS (ISB)

George White (ISB)

Ilya Shmulevich, PhD (ISB)

Poojitha Gundluru (GDIT)

Prema Venkatesun (GDIT)

Chris Gorman, PhD (MGH)

Pedro Kohler (Radical Imaging)

Hugo Aerts, PhD (BWH)

Cosmin Ciausu, MS (BWH)

Keyvan Farahani (NCI)

Markus Herrmann (MGH)

Davide Punzo (Radical Imaging)

James Petts (Radical Imaging)

Erik Ziegler (Radical Imaging)

Gitanjali Chhetri (Radical Imaging)

Rodrigo Basilio (Radical Imaging)

Jose Ulloa (Radical Imaging)

Madelyn Reyes (GDIT)

Derrick Moore (GDIT)

Mark Backus (GDIT)

Rachana Manandhar (BWH)

Rasmus Kiehl (Fraunhofer MEVIS)

Chad Osborne (GDIT)

Afshin Akbarzadeh (BWH)

Dennis Bontempi (BWH)

Vamsi Thiriveedhi (BWH)

Jessica Cienda (GDIT)

Bernard Larbi (GDIT)

Mi Tian (ISB)

As described in the Data Versioning section, a UUID identifies a particular version of an IDC data object. There is a UUID for every version of every DICOM instance, series, and study in IDC hosted data. Each such UUID can be used to form a GUID that is registered by the NCI Cancer Research Data Commons (CRDC), and can be used to access the data that defines that object.

This is a typical UUID:

641121f1-5ca0-42cc-9156-fb5538c14355

of a (version of a) DICOM instance, and this is the corresponding CRDC GUID:

dg.4DFC/641121f1-5ca0-42cc-9156-fb5538c14355

A GUID can be resolved by appending it to this URL, which is the GUID resolution service within CRDC: https://nci-crdc.datacommons.io/ga4gh/drs/v1/objects/ . For example, the following curl command:

>> curl https://nci-crdc.datacommons.io/ga4gh/drs/v1/objects/dg.4DFC/641121f1-5ca0-42cc-9156-fb5538c14355

returns:

which is a DrsObject. Because we resolved the GUID of an instance, the access_methods in the returned DrsObject includes a URL at which the corresponding DICOM entity can be accessed.

When the GUID of a series is resolved, the DrsObject that is returned does not include access methods because there are no series file objects. Instead, the contents component of the returned DrsObject contains the URLs that can be accessed to obtain the DrsObjects of the instances in the series.

Thus, we see that when we resolve dg.4DFC/cc9c8541-949d-48d9-beaf-7028aa4906dc, the GUID of the series containing the instance above:

curl -o foo https://nci-crdc.datacommons.io/ga4gh/drs/v1/objects/dg.4DFC/cc9c8541-949d-48d9-beaf-7028aa4906dc

we see that the contents component includes the GUID of that instance as well as the GUID of another instance:

Similarly, the GUID of a DICOM study resolves to a DrsObject whose contents component consists of the GUIDs of the series in that study.